Here at New Tactics in Human Rights, one of our guiding principles is “adapting to innovate.” This means we push the limits of what is believed to be possible to move human rights forward. Our program exists to connect human rights defenders with creative, flexible, and unique solutions – new tactics to improve your advocacy.

We recognize that in the ever-evolving landscape of human rights activism, technology holds immense power to make positive change. Artificial Intelligence (AI) tools have the potential to foster more efficient workflows and enhance our human rights impact. However, this potential requires us to tread with caution. Understanding the ethical considerations around the development and use of AI systems is crucial for ensuring they don’t do harm to human rights. Read more on this in Part I of this series: The Human Rights Risks of Artificial Intelligence.

In Part II, we delve into more practical use cases for AI in the realm of human rights activism. We shed light on the potential benefits and pitfalls.

How AI Could Amplify Human Rights Efforts

Nonprofits, grassroots groups, civil society organizations (CSOs), and nongovernmental organizations (NGOs) have long been at the forefront of advocating for justice and human rights. Some of us who work in these organizations may have started to experiment with tools like ChatGPT in our personal and professional lives. In a field where time and resources are limited, there might be ways for AI to amplify our efforts to protect and defend human rights. Here are a few ways activists and human rights defenders (HRDs) could use AI to their benefit:

AI as a Tool for Strategic Communications

One thing human rights defenders never have enough of is time. AI tools can produce ideas in an instant, reducing the time and resources needed to mobilize support and drive social change. In this sense it is a powerful tool for strategic communications in campaigns.

Often human rights campaigns include calls to action for individuals who might hold vastly different values and lived experiences than we do as organizers. For example, how can a climate justice campaign convince someone who doesn’t believe in climate change to understand and support the link between climate action and human rights? By harnessing the power of AI prompt engineering, we as advocates can better tailor our messaging to resonate with target audiences.

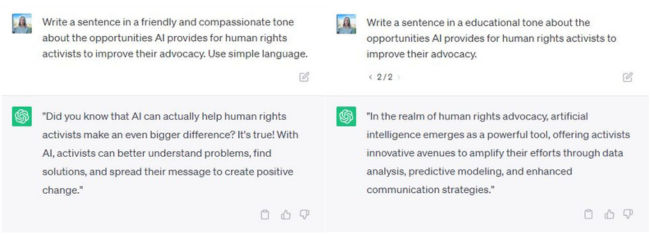

Prompt Engineering for Ideal Output

So, what exactly is prompt engineering? It’s the way that a user engages with a tool like ChatGPT. Specifically, it’s how the user gives the tool instructions and context to steer it toward the desired response. Typically, the more specific your prompt, the better the output you will get. Here are some tips for framing your prompt to get the best output from a tool like ChatGPT:

- Describe the tone that you would like the tool to use. For example, ChatGPT could be instructed to use an empathetic and compassionate tone. It could show concern for the struggles of victims of violations. An urgent and action-oriented tone might motivate readers to join a cause. Conversely, an educational and informative tone could provide facts and evidence to improve understanding of an issue.

- Specify the desired reading level of your text. It can be beneficial for activists and HRDs to simplify complex concepts in order to make them easily comprehensible. For example, we can ask ChatGPT to explain legal jargon. Or we could ask it to draft policy recommendations, ensuring optimal readability for a variety of audiences.

- Tailor the length and depth of your text. It’s helpful to include the desired format of your messaging. Perhaps you are drafting text for a social media post, op-ed, or press release. Space in these types of communications is often limited. Carefully defining the parameters or word counts of the AI output can save time and effort in balancing the quality and quantity of information included.

- Incorporate cultural and linguistic nuance. Human rights issues vary widely across cultures and regions. And as we know, AI tools can serve to reinforce and amplify biases. Therefore, it can be helpful to specify how the tool could make the text relevant for a specific audience. For example, you could instruct ChatGPT to provide a story or example that would be relevant to an audience in Latin America or the Middle East. By including this detail in our prompt, we can not only reduce the risk of insensitivity but also make our campaign messaging more relatable and impactful. Furthermore, AI-powered language translation and interpretation tools can help break down language barriers. They enable human rights defenders to communicate with diverse populations and share their messages across global networks.
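For readers who like to see the tips above in a reusable form, here is a minimal sketch in Python that assembles a prompt from tone, reading level, format, length, and audience. The function name `build_prompt` and its field labels are our own illustration, not part of ChatGPT or any other tool. The result is plain text that you would paste into whichever tool you use.

```python
def build_prompt(task, tone, reading_level, output_format, max_words, audience):
    """Combine the framing tips into one block of prompt text.

    Every parameter name here is hypothetical; adapt the labels to your needs.
    """
    lines = [
        f"Task: {task}",
        f"Tone: {tone}",
        f"Reading level: {reading_level}",
        f"Format: {output_format}, no more than {max_words} words",
        f"Audience: {audience}",
    ]
    return "\n".join(lines)


prompt = build_prompt(
    task="Explain the link between climate action and human rights.",
    tone="empathetic and action-oriented",
    reading_level="plain language, no legal jargon",
    output_format="social media post",
    max_words=60,
    audience="readers who are skeptical of climate change",
)
print(prompt)
```

Keeping each tip as a separate field makes it easy to change one element, such as the audience, and compare the outputs side by side.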

The Principle of “Do no Harm”

Warning! Even when we give specific context to optimize the AI-generated output, it is critical to still use caution and edit carefully. Tools like ChatGPT only know about the world up to the cutoff date of their training data, and even within that period they can give information that is factually incorrect or subtly misleading. For example, a version of ChatGPT whose training data ends in September 2021 wouldn’t know who won the FIFA Women’s World Cup in 2023. We encourage users in human rights-based fields to verify accuracy as you would with any source that you share.

A core principle of the New Tactics methodology is “Do No Harm.” Activists must be sure we are not entering information into an AI prompt that could potentially compromise the safety or the security of ourselves, our staff, or other vulnerable populations. We encourage activists and HRDs to remember what experts like Meredith Whittaker, President of Signal, have said: AI itself is a surveillance technology. Aside from being used to retrain AI algorithms, it’s unclear what happens with the data we enter into these tools. Activists and HRDs should take care not to include any personal information or sensitive data in their prompts. At this point, as the technology is still emerging, there is not adequate regulation and/or transparency to ensure public deployment adheres to the minimum ethical standards set by GDPR and the EU AI Act.
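One practical habit is to scrub obvious identifiers from a draft before pasting it into any AI tool. The sketch below is a rough illustration in Python, assuming that email addresses and phone numbers are the details you want to mask. The patterns and the `redact` function are our own and are deliberately simple: they can miss identifiers, and they can also mask harmless numbers such as dates. They do not catch names, locations, or case details, so a human review is still needed.

```python
import re

# Rough, illustrative patterns; they will not catch every format.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


draft = "Contact Amina at amina@example.org or +1 (555) 010-2345 about the hearing."
safe = redact(draft)
print(safe)
# Contact Amina at [EMAIL] or [PHONE] about the hearing.
# Note: the name "Amina" is NOT removed; names need a manual check.
```

The example shows why automated scrubbing is only a first pass: the name in the sentence survives, and only a careful reader would catch it.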

AI for Improved Workflow: Automating Repetitive Tasks

AI-driven automation can streamline administrative and logistical tasks, enabling activists to allocate more time and energy to strategic and tactical planning, or rest and creativity. Here are some examples of how AI platforms can automate non-preferred or repetitive tasks in human rights work:

- AI for Generating Email Responses: Compose AI is a Gmail plug-in that can draft messages directly in your email application. You can use the same prompt engineering tips described above to generate the best message. By using a tool like this, activists and HRDs can respond to routine messages more quickly. This frees up time for resilience practices or creative work.

- AI for Understanding Long Text: In working environments where reading and research time is limited, ChatGPT can summarize an article. It can rewrite information in simpler terms. The Gimme Summary plug-in is a free Google Chrome extension that can give an automatic summary of an article. ChatPDF.com can extract information from PDFs you upload (manuals, books, essays), allowing you to effectively “chat” with any PDF up to 120 pages.

- AI for Project Management: ChatGPT can propose training agendas, schedules, process maps, budgets, etc. which could be used as a starting point for planning events or projects. The Show Me Diagrams plug-in for ChatGPT allows users to turn a written process into a visual diagram. For example, you could ask it to “create a visualization of my campaign planning process.”

- AI for Creating Presentations: The SlidesAI.io add-on for Google Slides can transform any text you give it into visually appealing slides in a variety of types and color palettes. Again, tools like this could save activists and HRDs valuable time that could be spent elsewhere in their important work. There are a number of similar applications for beautifying slides and PowerPoints.

- AI for Audio Transcription: A variety of applications, such as Otter and Whisper, automate audio or video transcription. These can save time in transcribing videos or podcasts. They can help you make your content more easily accessible in a variety of formats.

- AI Chatbots as a First Point of Contact: AI-powered chatbots can engage with supporters and stakeholders, addressing FAQs and answering initial inquiries. Innovations like this could help understaffed and low-resource organizations more effectively connect with volunteers or donors. For example, a chatbot on a nonprofit’s website could be trained to respond to common queries like “how do I make a donation?” or “I want to volunteer” with the appropriate resource or contact form. This frees up capacity for staff to respond to more complex inquiries.
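To make the chatbot idea concrete, here is a minimal sketch in Python of a first-point-of-contact responder. It uses simple keyword matching rather than a trained AI model, and the keywords, answers, and `reply` function are all hypothetical. What it illustrates is the routing: common questions get a standard answer, and everything else is handed to a staff member.

```python
# Hypothetical keyword-to-answer table; "donat" matches "donate" and "donation".
FAQ = {
    "donat": "You can give through our donation page.",
    "volunteer": "Please fill out our volunteer contact form.",
}

# Anything the table does not cover is routed to a person.
FALLBACK = "Thanks for reaching out. A staff member will reply to you directly."


def reply(message):
    """Return a standard answer for common queries, else hand off to staff."""
    text = message.lower()
    for keyword, answer in FAQ.items():
        if keyword in text:
            return answer
    return FALLBACK


print(reply("How do I make a donation?"))
print(reply("I want to volunteer"))
print(reply("My family member was detained and I need help."))
```

The third message is the kind of complex or sensitive inquiry that should never receive an automated answer, so the sketch sends it straight to a human.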

“Always Keep a Human In the Loop”

There are few tasks you won’t find an AI tool for. It’s important that each human rights-based organization individually decide how it will approach the use of AI in its work. Here’s a sample policy from Code Hope Labs that could be helpful for organizations that are approaching this conversation.

Our advice: always keep a human in the loop. Don’t build workflows that are entirely dependent on unreliable technology. Instead, use these tools strategically to free up your capacity to pursue more urgent tactical action.

Users should also take care not to become too reliant on these kinds of tools. Tools like ChatGPT may eventually live behind a paywall, further exacerbating issues of access. At best, the free version does mediocre work. It is no substitute for the vast expertise and lived experience of human rights activists and HRDs.

AI as a Tactic Used in Political Satire

As the capabilities of AI continue to expand, the potential for even more sophisticated and creative forms of political satire grows. The use of humor, satire, or parody can promote critical discourse around the absurdity and consequences of policy decisions.

An example of AI generated satire can be found in the way one group generated mock scenarios in which world leaders were represented as refugees or low-wage workers. No doubt this tactic is controversial – but it succeeds in capturing public attention. It sheds light on policies that affect marginalized populations.

Suella Braverman, a British politician pictured as a refugee in an AI-generated image meant to criticize her harsh immigration policies

The fusion of humor and protest has long been a catalyst for social change. The question is whether AI could serve as a new and innovative tactic to provoke critical discourse, or whether the creation of “deepfake” imagery should be avoided altogether. Again, we recommend that each individual organization or campaign determine whether the benefits of this tactic outweigh the risks in your own unique circumstances. From there, you can set expectations for responsible tactical action.

AI in Human Rights Work: A Cautionary Tale

Humanitarian and development workers have long used new technologies to document abuses and assist people in need. Whether using cell phone video to document instances of police brutality or drones and satellite footage to assess natural disasters from afar, we can’t deny that technical innovation has improved the ways we work. However, the nature of these technologies and their ability to collect and analyze data is rapidly evolving. AI can swiftly process vast amounts of data, extracting meaningful patterns and insights that would take humans considerably longer to uncover. Activists can leverage AI to analyze human rights abuses, track social injustices, and identify systemic discrimination. AI can provide them with a robust evidentiary foundation for their advocacy campaigns.

While the potential of these tools is exciting, it is equally important to recognize that we do not yet know all of the potential harms and pitfalls. How can we balance the positive benefits of this new technology with its potential risks?

It is unclear whether the same technologies that are transforming various aspects of our commercial and social life also have the potential to address human suffering, empowerment, and justice.

Marc Latonero and Zara Rahman on Data, Human Rights, and Human Security

Use Caution as AI Becomes Ubiquitous

As more and more AI tools become embedded in the software many of us use daily, from companies like Google, Microsoft, and Adobe, it becomes more and more important that we learn how to incorporate these tools wisely and only when appropriate. Some human rights-based campaigns may rely heavily on AI, while other organizations or individual activists may decide that AI use is not encouraged at all. While the potential benefits of AI in activism are significant, it is crucial for activists and HRDs to approach these technologies with caution, taking the time to understand their capabilities, limitations, and potential risks.

For more resources on AI in human rights, check out the work of Witness, Social Movement Technologies, and the Distributed Artificial Intelligence Research Institute (DAIR), as well as the articles listed below.

Resources: